Hello BRIGHT Run family,

I hope you are enjoying spring.

In some of my recent letters to you, I shared how advanced Artificial Intelligence (AI) models are designed to work with multiple types of data, such as text and audio; image and text; or text, image, and audio.

One such type of model is the vision-language model (VLM). VLMs can process image and text data together and learn to find associations between them. Put simply, they can analyze images and then describe them in words.

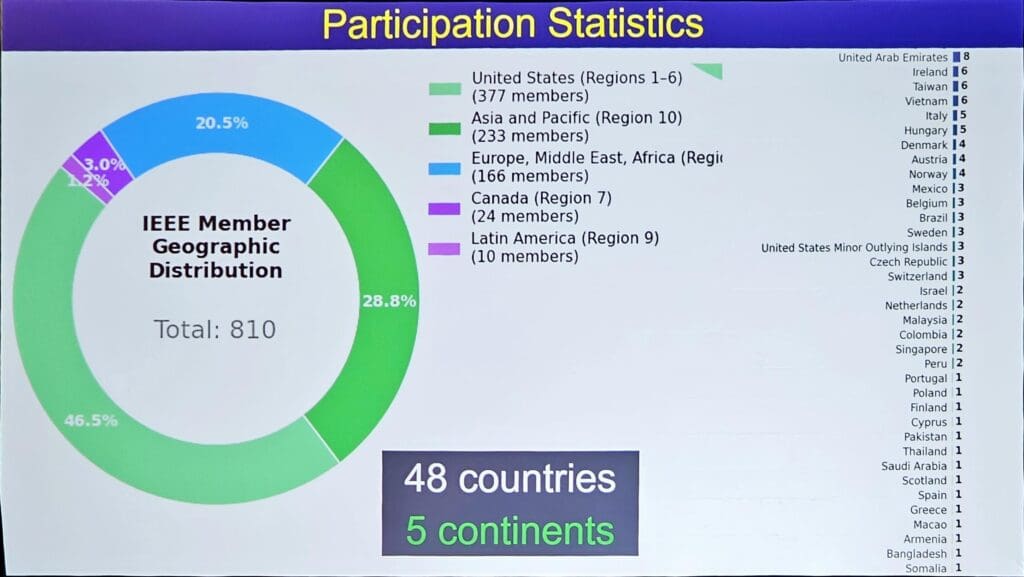

My letter today is about the first of our two studies using VLMs for breast imaging, which I recently presented at the International Symposium on Biomedical Imaging (ISBI 2025) in Houston, Texas. ISBI is a prestigious, widely attended, peer-reviewed conference in biomedical imaging.

The first study had two objectives. The first was to determine how well existing medical and non-medical VLMs categorize mammograms as low-suspicion or high-suspicion. The second was to develop our own medical VLM for mammograms.

(A medical VLM understands medical images and text. A non-medical VLM knows less about medical images and text but is well versed in the associations between general images and text.)

We found that existing medical VLMs were no better at categorizing mammograms than non-medical ones, especially when tested on newer mammogram data they had not seen before.

Our own medical VLM outperformed the existing medical and non-medical VLMs on the previously unseen data. This improvement came from a novel methodology that we developed. Nevertheless, there is room for further improvement by involving experts in a different way, which we are now working on.

This work was performed in collaboration with Dr. Yimin Yang and his trainees Zhichen Yan and Dr. Wandong Zhang from Western University. The work will appear in the ISBI 2025 conference proceedings.

The conference was also a great opportunity to learn what is happening across the wider field, where growth is enormous and new research directions are emerging, while I stay busy with my core areas of interest.

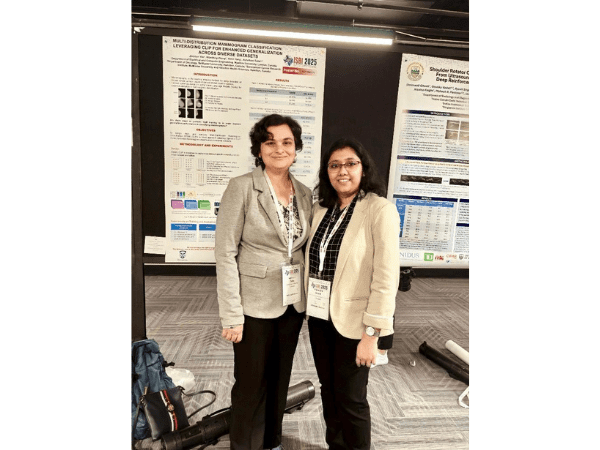

One highlight of the conference: I met another researcher, Dr. Shrimanti Ghosh, a Postdoctoral Research Scientist at the University of Alberta and a fellow alumna of my middle school in India! Coincidentally, our presentations were scheduled back to back. That was a pleasant surprise for both of us!

In my next letter, I will share our second research project and the other special moment from the conference.

Stay well.

Best,

Ashirbani

Dr. Ashirbani Saha is the first holder of the BRIGHT Run Breast Cancer Learning Health System Chair, a permanent research position established by the BRIGHT Run in partnership with McMaster University.